On this page, we first discuss some of the more theoretical concepts that underlie rather different approaches to syntax, before turning to some more practical applications and problems in syntactic analysis.

As we have seen on the previous page, agreement sometimes holds between different constituents, but we may also find cases of agreement within one and the same constituent. In this case, one of the words in the constituent is usually assumed to govern the behaviour of the other(s) and is therefore referred to as the head of the phrase. In NPs, this is usually deemed to be the noun, in VPs the finite verb and in PPs the preposition. Let’s try to exemplify this by using variants of the NP the tall tree. First of all, the adjective tall may be deleted without making the NP any less well-formed, so the adjective clearly cannot be the controlling element in the NP. Next, if we form the plural of the NP, we arrive at either the tall trees or simply tall trees; since the determiner may be dropped in the plural, it is also very unlikely to be the head. And last, but not least, we may change the determiner itself, yielding a tall tree instead of the tall tree, an option we only have with the singular noun, whereas the plural form restricts us to the determiner the, if we have one at all. The form of the noun thus constrains the choice of determiner, which is why the noun is taken to be the head.

Using the earlier example units The two friends went on a trip to Edinburgh. and This is a very good example of a sentence., identify the heads of the phrases they contain. Don’t forget that some phrases may contain more than one head!

A similar kind of controlling function is also often seen in the behaviour of verbs, which force us to have a certain number of (usually nominal) constituents within the syntactic unit. In more traditional types of grammar, this is often discussed under the heading of transitivity, where we can distinguish between four different types:

A more modern idea is that of valency, usually associated with the French linguist Tesnière. Valency, a term originally borrowed from chemistry, describes the number of actants (or arguments) involved in the functioning of a verb. This number may be given including or excluding the subject; we’ll adopt the former method, i.e. count the subject. Thus, a verb that is traditionally regarded as intransitive would have a valency of 1, a mono-transitive one a valency of 2 and a di-transitive one a valency of 3. The number without the subject describes the number of complements necessary to make the construction involving the particular verb well-formed. Relating this to the examples above, we would have the following values for categories 1) - 3):

| transitivity | valency | complements |
|---|---|---|
| intransitive | 1 | 0 |
| mono-transitive | 2 | 1 |
| di-transitive | 3 | 2 |
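
The relationship between the two counts in the table can be made explicit in a few lines of Python. This is only a minimal sketch: the names `COMPLEMENTS` and `valency` are made up for illustration, and the only rule it encodes is the one stated above, namely that valency including the subject is the number of complements plus one.

```python
# Number of complements required by each traditional transitivity type
# (values taken from the table; the dictionary name is hypothetical).
COMPLEMENTS = {
    "intransitive": 0,
    "mono-transitive": 1,
    "di-transitive": 2,
}


def valency(transitivity, include_subject=True):
    """Return the valency for a transitivity label.

    With include_subject=True (the convention adopted on this page),
    the subject counts as one additional actant.
    """
    complements = COMPLEMENTS[transitivity]
    return complements + 1 if include_subject else complements


for label in COMPLEMENTS:
    print(label, valency(label), valency(label, include_subject=False))
```

Calling `valency("di-transitive")` returns 3, while `valency("di-transitive", include_subject=False)` returns 2, i.e. the number of complements.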

Other phrases that are not required for this purpose, but simply provide additional information, are referred to as adjuncts, as in she gave him a present at 10 o’clock in the morning, where the PP at 10 o’clock in the morning is the adjunct.

Using the earlier example units She ran a mile yesterday. and The two friends went on a trip to Edinburgh., see whether you can easily determine what their transitivity is, as well as whether the non-subject phrases represent complements or adjuncts.

Many syntactic theories seem to assume that a declarative structure is the syntactic default or ‘prototype’ and that all other syntactic structures are derived from it by moving (or removing) constituents and adding relative or question markers in appropriate places. In earlier generative theories, this was seen as being performed via syntactic transformations, a concept that has by now been abandoned in most theories, but will be illustrated briefly below in conjunction with deep structure. A certain amount of reordering of constituents, however, still seems to be assumed in most theories and is reflected in the idea of traces, i.e. syntactic slots left empty after something has been moved from them.

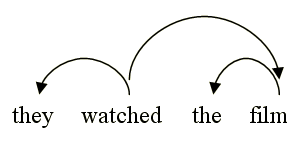

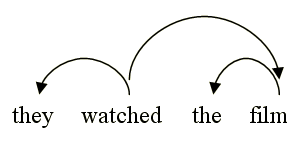

Noam Chomsky and his followers initially also assumed that sentences had an underlying deep structure, a kind of logical structure often similar to a declarative one, which reflects the semantic content of a sentence, and which may be quite different from the surface structure eventually generated when we in fact produce this sentence. An example of this can be seen in the table below:

| structure type | | subj. | infl. | pred. | obj. |
|---|---|---|---|---|---|
| surface (declarative) | | they | | watched | the film |
| deep structure | | they | (past) tense | watch | the film |
| surface (yes/no question) | did | they | e | watch | the film |

In this example, we can see that the deep structure assumes that an inflectional (infl.) element, representing the tense marking, is present in the syntactic position preceding the predicate. In the surface structure for the declarative, this would be made explicit in the past tense suffix {-ed}, attached to the predicate watch. In the interrogative surface structure, the infl. element is realised as a past tense form of the auxiliary do, which ‘swaps’ its position with the subject, leaving an empty element (e) or trace behind in its original position.
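
The two derivations just described can be mimicked in a small Python sketch. It is a toy illustration rather than an implementation of any particular theory: the class and function names are hypothetical, only the past tense is handled, and the verb is assumed to be regular, so that the past tense can be formed by adding -ed.

```python
from typing import NamedTuple


class DeepStructure(NamedTuple):
    subj: str
    infl: str  # tense feature, e.g. "past"
    pred: str  # uninflected verb
    obj: str


def surface_declarative(ds):
    """Realise infl. as a suffix attached to the predicate."""
    if ds.infl != "past":
        raise ValueError("this sketch only handles the past tense")
    # Assumption: regular verb, so the past tense is simply pred + "ed".
    return [ds.subj, ds.pred + "ed", ds.obj]


def surface_yes_no_question(ds):
    """Realise infl. as 'did', front it, and leave a trace 'e' behind."""
    if ds.infl != "past":
        raise ValueError("this sketch only handles the past tense")
    return ["did", ds.subj, "e", ds.pred, ds.obj]


deep = DeepStructure(subj="they", infl="past", pred="watch", obj="the film")
print(" ".join(surface_declarative(deep)))      # they watched the film
print(" ".join(surface_yes_no_question(deep)))  # did they e watch the film
```

Both surface forms are computed from the same `deep` object, which mirrors the idea that one deep structure underlies several surface structures.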

However, the focus of many theories seems to have shifted away from this notion somewhat, and there now seems to be a much greater emphasis on describing the grammatical roles or functions of the individual constituents of a syntactic unit.

A further strong focus of many modern theories has been the explanation of language acquisition, and especially of its universal aspects.

As the name generativism implies, this theoretical approach is based on the idea of generating syntactic units from a limited set of syntactic rules. An example of this is the simplified expansion of the earlier example unit they watched the film:

Each time a constituent is replaced by a terminal element (i.e. a word) in our example, the constituent is greyed out and cancelled.
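
The same kind of expansion can be sketched as a small set of rewrite rules in Python. The rule set and the category labels (`Pron`, `Det`, `N`, `V`) are assumptions chosen for illustration, not a claim about the exact rules used in the expansion above. The function always rewrites the leftmost constituent that can still be expanded, and the `choices` argument says which alternative to take whenever a category has more than one rule.

```python
# A toy phrase structure grammar (hypothetical rule set for illustration).
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["Pron"], ["Det", "N"]],
    "VP": [["V", "NP"]],
    "Pron": [["they"]],
    "V": [["watched"]],
    "Det": [["the"]],
    "N": [["film"]],
}


def derive(start, choices):
    """Return every step of a leftmost derivation starting from `start`.

    `choices` supplies, in order, the index of the alternative to use
    each time a category with more than one rule is expanded.
    """
    form = [start]
    steps = [form]
    choices = iter(choices)
    while True:
        # Find the leftmost symbol that is still a category, not a word.
        idx = next((i for i, sym in enumerate(form) if sym in RULES), None)
        if idx is None:
            return steps  # only terminal elements (words) are left
        alternatives = RULES[form[idx]]
        pick = next(choices) if len(alternatives) > 1 else 0
        form = form[:idx] + alternatives[pick] + form[idx + 1:]
        steps.append(form)


# First NP (subject) expands to a pronoun, second NP (object) to Det + N.
for step in derive("S", [0, 1]):
    print(" ".join(step))
```

The last line printed is `they watched the film`. Choosing the alternatives differently, e.g. `[1, 0]`, yields other strings licensed by the same rules, which illustrates how a limited rule set can generate more than one syntactic unit.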

Dependency, on the other hand, is based on identifying and describing the controlling elements, i.e. heads, in syntactic units and how they relate to one another. As such, it tries to interpret especially the argument structure of verbs in more detail and may therefore provide different structural descriptions from the typical phrase structure descriptions we find in generative grammar.
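
To make the contrast with phrase structure rules more concrete, a dependency description of they watched the film can be written down as a list of head-dependent relations. This is only a sketch: the relation labels (`subject`, `object`, `determiner`) and the function names are assumptions chosen for illustration, and different dependency frameworks use different label sets.

```python
# Each arc is (dependent, head, relation); the labels are illustrative only.
ARCS = [
    ("they", "watched", "subject"),
    ("film", "watched", "object"),
    ("the", "film", "determiner"),
]


def dependents(head, arcs=ARCS):
    """Return the (dependent, relation) pairs controlled by `head`."""
    return [(dep, rel) for dep, h, rel in arcs if h == head]


def root(arcs=ARCS):
    """Return the one word that is a head but never a dependent."""
    heads = {head for _, head, _ in arcs}
    deps = {dep for dep, _, _ in arcs}
    (top,) = heads - deps
    return top


print(root())                 # watched
print(dependents("watched"))  # [('they', 'subject'), ('film', 'object')]
print(dependents("film"))     # [('the', 'determiner')]
```

Here the verb is the root of the whole unit, and its two dependents correspond to the actants counted in its valency.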

Parsing is the act of assigning a structural description to a syntactic unit. In IT, it provides the basis for subsequent semantic analysis in, e.g., dialogue systems or question-answering systems. In linguistic research, it is used to identify and analyse complex syntactic structures or phenomena, as well as to extract lexical, grammatical or semantic information from corpora.

The parsing process itself can be quite complex. If we only want to identify constituents for further classification, we can therefore employ a simpler type of parsing, called chunk parsing, which initially only identifies easily identifiable constituents, rather than generating a whole tree, and then tries to ‘add them together’ later.
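
The two stages of chunk parsing can be sketched in plain Python. Everything here is a simplifying assumption made for illustration: the part-of-speech tags are assigned by hand, the tag names are made up, and the only chunk pattern is an NP consisting of an optional determiner, any number of numerals or adjectives, and at least one noun. Real chunkers use far richer patterns.

```python
def chunk_noun_phrases(tagged):
    """First pass: group easily identifiable NPs, leave other words alone."""
    chunks = []
    i, n = 0, len(tagged)
    while i < n:
        j = i
        if tagged[j][1] == "DET":  # optional determiner
            j += 1
        while j < n and tagged[j][1] in ("NUM", "ADJ"):  # optional modifiers
            j += 1
        k = j
        while k < n and tagged[k][1] == "NOUN":  # one or more nouns
            k += 1
        if k > j:  # at least one noun was found, so emit an NP chunk
            chunks.append(("NP", [word for word, _ in tagged[i:k]]))
            i = k
        else:  # no NP starts here: keep the single word with its own tag
            chunks.append((tagged[i][1], [tagged[i][0]]))
            i += 1
    return chunks


def attach_prepositions(chunks):
    """Second pass: combine a preposition and a following NP into a PP."""
    result = []
    i = 0
    while i < len(chunks):
        is_prep = chunks[i][0] == "PREP"
        if is_prep and i + 1 < len(chunks) and chunks[i + 1][0] == "NP":
            result.append(("PP", chunks[i][1] + chunks[i + 1][1]))
            i += 2
        else:
            result.append(chunks[i])
            i += 1
    return result


# Hand-tagged example unit (the tags are assumptions, not tagger output).
sentence = [
    ("The", "DET"), ("two", "NUM"), ("friends", "NOUN"), ("went", "VERB"),
    ("on", "PREP"), ("a", "DET"), ("trip", "NOUN"),
    ("to", "PREP"), ("Edinburgh", "NOUN"),
]

for label, words in attach_prepositions(chunk_noun_phrases(sentence)):
    print(label, " ".join(words))
```

The first pass finds the NPs The two friends, a trip and Edinburgh; the second pass then merges the prepositions with the NPs that follow them into the PPs on a trip and to Edinburgh. Note that the sketch does not decide whether to Edinburgh attaches to trip or to went, which is exactly the kind of decision chunk parsing postpones.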

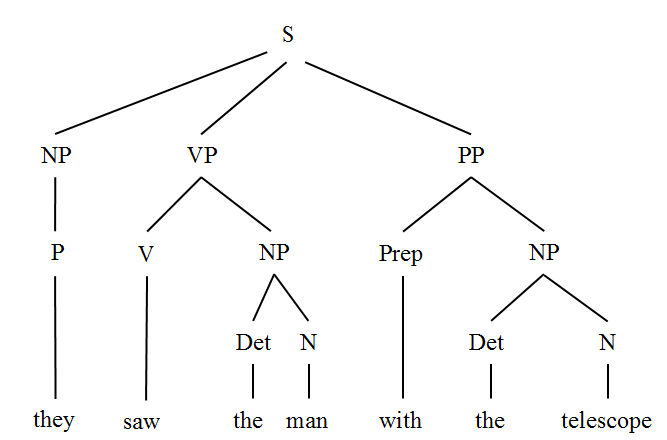

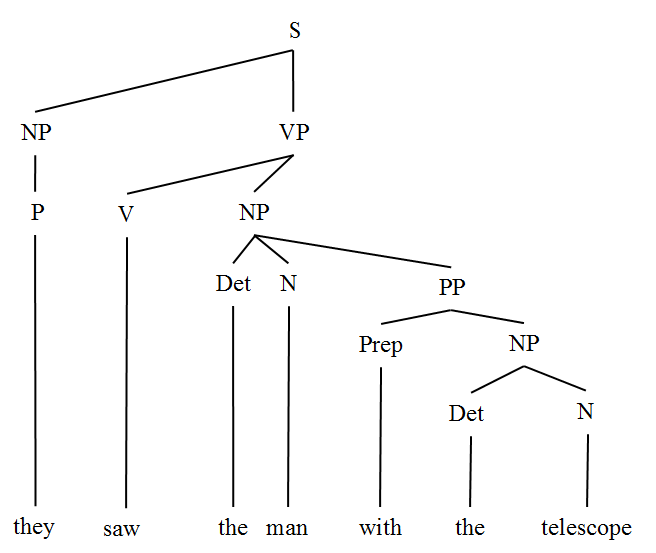

Ambiguous syntactic structures may present a big problem for assigning a structural description to syntactic units, which is why they represent an important field of study in syntax.