Semantics (from Gr. σημαντικός = meaningful ⇐ σήμα = sign) is the study of (‘grammatical’) meaning in language. In studying semantics, we can draw a distinction between the meaning(s) attributed to individual words and the meaning(s) of larger syntactic units.

The study of word meaning constitutes the more traditional approach to semantics, which can be traced back to Aristotle and assumes that it is possible to categorise words (or concepts) according to sets of necessary and sufficient features. It is essentially ‘paradigmatic’ in nature, as it contrasts the semantic content of individual words in terms of their individual meaning components, as we shall see further below. For that purpose, it applies a technique known as componential analysis, which attempts to identify salient features of meaning (by analogy with the features we’ll later encounter when we talk about phonology).

In contrast to this, the analysis of meaning in context can be seen as more ‘syntagmatic’. Here, a further distinction is sometimes made between ‘sentence meaning’ and ‘utterance meaning’, where the former refers to the literal meaning of the words as they are uttered and the latter to their meaning in context, i.e. how they are meant to be interpreted in that particular context. However, the second type may also be seen as belonging to the realm of pragmatics, rather than semantics. We’ll largely focus on the analysis of word meaning in our discussions, although we’ll also look at some issues in contextual meaning.

We talk of two words being synonymous when they basically express the ‘same’ meaning. The existence of several different expressions for one meaning may have different reasons or motivations, as you’ll be able to see when you look at the brief list below:

When two words behave in exactly the same way in all contexts, we have total synonymy. This may be deemed true for what has been labelled ‘dialectal’ synonymy above, but for almost all other cases, we can probably only assume partial synonymy. The negative counterpart to synonymy is called antonymy and will be described in more detail later.

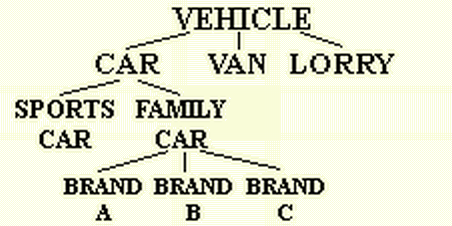

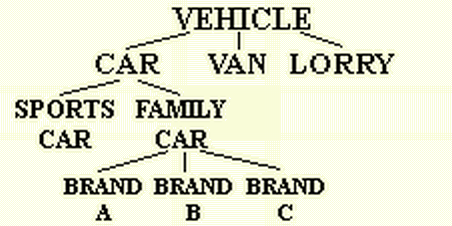

In order to describe the meaning of individual words, it is very useful to be able to group them into classes or categories. Each class/category may in turn be divided into a number of sub-classes or sub-categories. Categories are often based on general classification systems used in everyday language (folk categories), but may also be based on technical classification systems (expert categories). Within each categorisation scheme, there is usually a hierarchy, where some words are deemed to belong to superordinate and some to subordinate categories, with the subordinate elements usually being specific types of the superordinate categories. Words that are used to label superordinate categories are usually referred to as hypernyms (also hyperonyms; {hyper} = above), and those that belong to subordinate categories are known as their hyponyms ({hypo} = below). Thus, the relationship between a hypernym and its individual hyponyms is usually an is-a relationship, where the hyponyms are specialised types of the hypernym. Therefore, car and lorry would be hyponyms of vehicle and, conversely, vehicle would be the hypernym of car and lorry.

The relationship between hypernyms and hyponyms is also often represented in the form of a tree, similar to those in syntax, e.g.

Words that appear at the same level within the hierarchy are called co-hyponyms, e.g. sports car and family car in our case. A classification scheme like the one above that groups words together is known as a taxonomy.
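To make the relationships between hypernyms, hyponyms and co-hyponyms a little more concrete, here is a minimal Python sketch of a small taxonomy. The shape of the tree is an assumption pieced together from the words mentioned in the text (vehicle, car, lorry, sports car, family car), and the names `HYPERNYM_OF`, `hypernyms`, `is_a` and `co_hyponyms` are purely illustrative.

```python
# Hypothetical mini-taxonomy: each word points to its direct hypernym.
# The root of the hierarchy has no hypernym (None).
HYPERNYM_OF = {
    "vehicle": None,
    "car": "vehicle",
    "lorry": "vehicle",
    "sports car": "car",
    "family car": "car",
}


def hypernyms(word):
    """Return all hypernyms of a word, from most specific to most general."""
    chain = []
    parent = HYPERNYM_OF[word]
    while parent is not None:
        chain.append(parent)
        parent = HYPERNYM_OF[parent]
    return chain


def is_a(word, category):
    """True if the word is a (direct or indirect) hyponym of the category."""
    return category in hypernyms(word)


def co_hyponyms(word):
    """Return the other words that share the same direct hypernym."""
    parent = HYPERNYM_OF[word]
    if parent is None:
        return []
    return sorted(w for w, p in HYPERNYM_OF.items() if p == parent and w != word)


print(hypernyms("sports car"))    # ['car', 'vehicle']
print(is_a("lorry", "vehicle"))   # True
print(co_hyponyms("sports car"))  # ['family car']
```

Storing only the direct hypernym of each word is enough here, because the is-a relationship is transitive: a sports car is a car, a car is a vehicle, and so a sports car is also a vehicle.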

Try to develop a taxonomy for living beings and justify your choices as to the different levels you may want to postulate.

Basic semantic features are a means of classifying individual properties of words, i.e. conducting componential analysis, so that these can be compared to or contrasted with one another. Feature classification systems are usually binary (i.e. have only two values, + and -), but may sometimes also specify a (third) neutral value (0). Some typical, frequently used features are:

And examples where these features have been applied are:
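The idea of componential analysis can also be sketched in a few lines of Python. The features HUMAN, ADULT and MALE and the five words analysed below are assumptions, chosen as commonly cited textbook illustrations rather than taken from the lists in this section; the values 1, -1 and 0 stand for +, - and the neutral value, respectively.

```python
# Hypothetical feature matrix: 1 = '+', -1 = '-', 0 = neutral/unspecified.
FEATURES = {
    "man":   {"HUMAN": 1, "ADULT": 1,  "MALE": 1},
    "woman": {"HUMAN": 1, "ADULT": 1,  "MALE": -1},
    "boy":   {"HUMAN": 1, "ADULT": -1, "MALE": 1},
    "girl":  {"HUMAN": 1, "ADULT": -1, "MALE": -1},
    "child": {"HUMAN": 1, "ADULT": -1, "MALE": 0},
}

SYMBOLS = {1: "+", -1: "-", 0: "0"}


def describe(word):
    """Render the feature bundle of a word in the usual bracket notation."""
    return " ".join(f"[{SYMBOLS[value]}{name}]" for name, value in FEATURES[word].items())


def contrast(word_a, word_b):
    """Return the names of the features on which two words differ."""
    return [
        name
        for name in FEATURES[word_a]
        if FEATURES[word_a][name] != FEATURES[word_b][name]
    ]


print(describe("girl"))          # [+HUMAN] [-ADULT] [-MALE]
print(describe("child"))         # [+HUMAN] [-ADULT] [0MALE]
print(contrast("man", "woman"))  # ['MALE']
print(contrast("man", "girl"))   # ['ADULT', 'MALE']
```

Comparing feature bundles in this way shows the ‘paradigmatic’ nature of the approach: man and woman contrast in a single feature, whereas man and girl contrast in two.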

Leech, G. 1981. Semantics: the Study of Meaning (2nd ed.). London: Penguin.

Taylor, J. 1995. Linguistic Categorization: Prototypes in Linguistic Theory (2nd ed.). Oxford: OUP.