Corpus linguistics is essentially a methodology for working with linguistic data. Although many people see it purely as the investigation of linguistic phenomena in written and spoken corpora with the help of various types of software, it often also involves compiling and annotating such collections of text.

Corpora are collections of spoken or written texts, compiled for linguistic investigation according to particular criteria that make them relevant for either general or specific research on language. Linguistic corpora these days tend to be stored in electronic form, so that they can be searched and analysed easily and efficiently. The earliest electronic corpora of written language contained one million words, while those of spoken language were generally much smaller. Modern reference corpora, such as the British National Corpus (BNC), contain 100 million words or more and often comprise both written and spoken language. For further information, you can take a look at my page on general corpora, which lists some of the most important ones and provides some additional details.

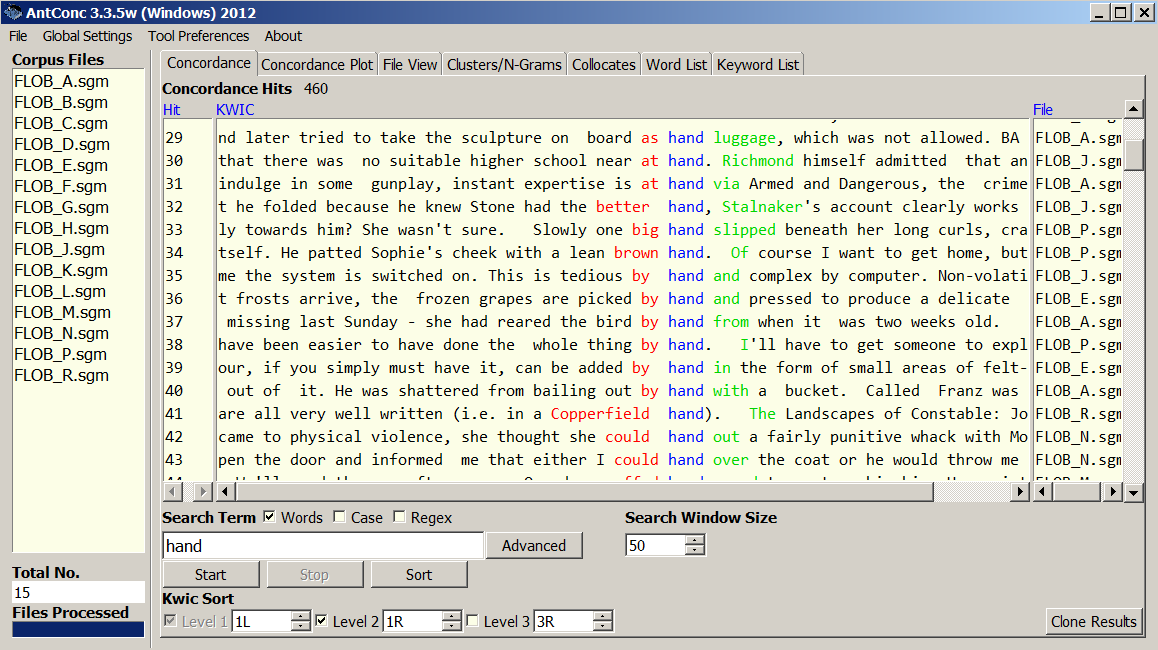

One of the most important tasks in corpus linguistics is to search text corpora for illustrative samples of linguistic features and retrieve them. Although there are also more computational methods of retrieving and processing such data, this is usually achieved by means of a concordance program, or concordancer for short. A concordance is a listing of the occurrences of an individual word or search term, usually given together with a line number and the name of the file each occurrence was retrieved from. The most common format for such a listing is the Key Word In Context (KWIC) format, where the search term is shown in the centre of the output window, with a context of variable or fixed length on either side of it. The following graphic provides an illustration of a concordance, produced with the excellent freeware concordancer AntConc.

In the example of the search term hand, you can see the list of files that were used on the left-hand side. In the centre window, you find the KWIC concordance display of the search term, sorted first according to the word immediately to its left and then according to the word immediately to its right, with a context of 50 characters on either side. The fact that a specific sort order has been used is illustrated by the colour coding. In the smaller windows next to the concordance window, the number of the individual occurrence (hit) appears to the left of the relevant concordance line and the name of the file to the right of it. If the list were unsorted, the hit numbers would all be consecutive.
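To make the KWIC idea more concrete, here is a minimal Python sketch of a concordancer. It is not how AntConc works internally; the function names (`kwic`, `last_word_left`, `first_word_right`) and the sample sentence are made up for illustration. It collects a fixed-width character context around each hit and sorts the lines by the word immediately to the left and then by the word immediately to the right.

```python
import re

def kwic(text, term, width=50):
    """Return (left, keyword, right) tuples for each whole-word hit of `term`."""
    pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
    lines = []
    for match in pattern.finditer(text):
        # Fixed-width character context on either side of the hit
        left = text[max(0, match.start() - width):match.start()]
        right = text[match.end():match.end() + width]
        lines.append((left, match.group(), right))
    return lines

def last_word_left(context):
    """Word immediately to the left of the search term (empty if none)."""
    words = re.findall(r"\w+", context.lower())
    return words[-1] if words else ""

def first_word_right(context):
    """Word immediately to the right of the search term (empty if none)."""
    words = re.findall(r"\w+", context.lower())
    return words[0] if words else ""

# Invented sample text, only for demonstration
sample = "Give me your hand. She took his hand and the other hand was cold."
hits = kwic(sample, "hand", width=20)

# Sort by the word on the left first, then by the word on the right
hits_sorted = sorted(
    hits, key=lambda h: (last_word_left(h[0]), first_word_right(h[2]))
)
for left, keyword, right in hits_sorted:
    print(f"{left:>20} {keyword} {right:<20}")
```

Because the context is cut by characters rather than by words, the outermost word on either side may be truncated, just as in a fixed-width concordance display.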

For work with the British National Corpus (BNC), you can use the free web access version provided at Lancaster University, for which you first need to register.

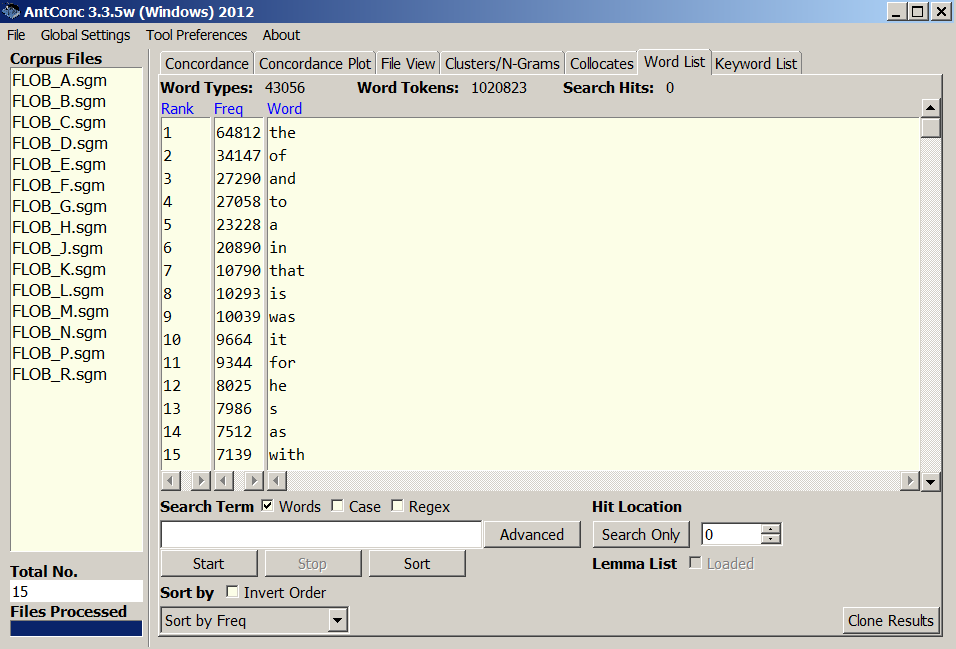

Another very frequent task in corpus linguistics is to create word frequency lists. These can be used to analyse the vocabulary contained in a text or corpus, e.g. in order to find domain-specific vocabulary or to attempt authorship identification for works that cannot easily be attributed to a particular author.

As you can see in the example illustration above, word lists are usually sorted in order of descending frequency, so that the most frequent words appear at the top of the list. However, another thing that the illustration shows quite clearly is that the most frequent words in most corpora tend to be function words, which do not usually contribute much to the meaning of the text(s). This is why programs that generate frequency lists often provide the user with the option of specifying a list of so-called stop-words, i.e. words that are excluded from the analysis.
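A frequency list with an optional stop-word filter can be sketched in a few lines of Python. The tokeniser, the tiny `STOP_WORDS` set and the sample sentence below are assumptions chosen for illustration; real tools use more careful tokenisation and much longer stop-word lists.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list
STOP_WORDS = {"the", "a", "of", "and", "to", "in", "is"}

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())

def frequency_list(text, stop_words=frozenset()):
    """Return (word, count) pairs in order of descending frequency."""
    counts = Counter(t for t in tokenize(text) if t not in stop_words)
    return counts.most_common()

# Invented sample text, only for demonstration
sample = "The cat and the dog saw the cat in the garden of the house."

print(frequency_list(sample)[:3])              # function words dominate
print(frequency_list(sample, STOP_WORDS)[:3])  # content words come to the top
```

Comparing the two printed lists shows the effect described above: without the filter the function word "the" heads the list, while with the filter only content words remain.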

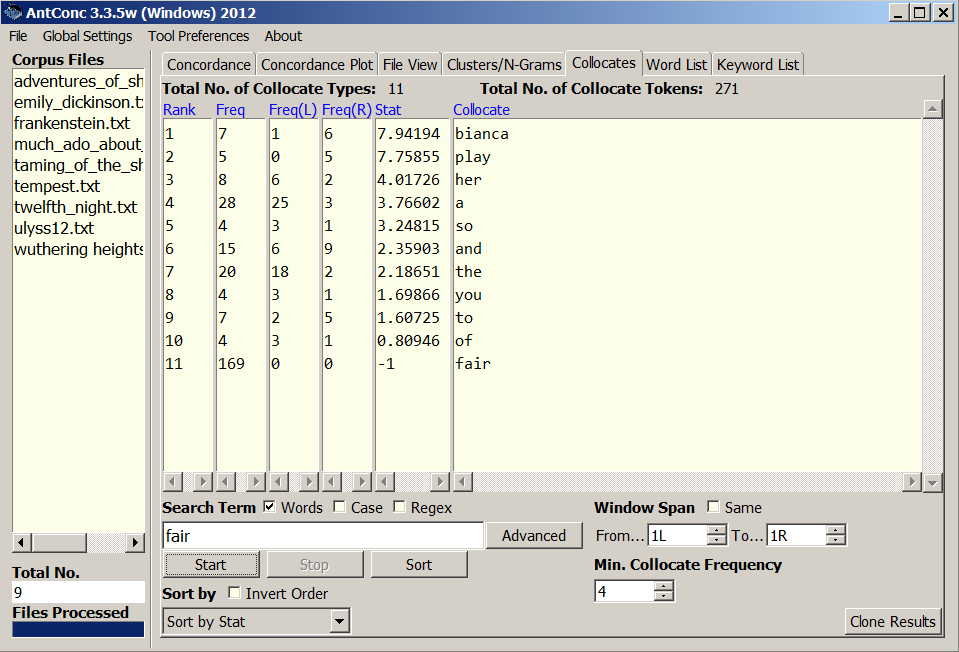

We have already learnt what collocations are when we talked about meaning in context in our discussion of semantics. The illustration below shows how AntConc can be used to compute collocates, or rather what kind of output its collocational analysis, based on a mutual information score, produces.

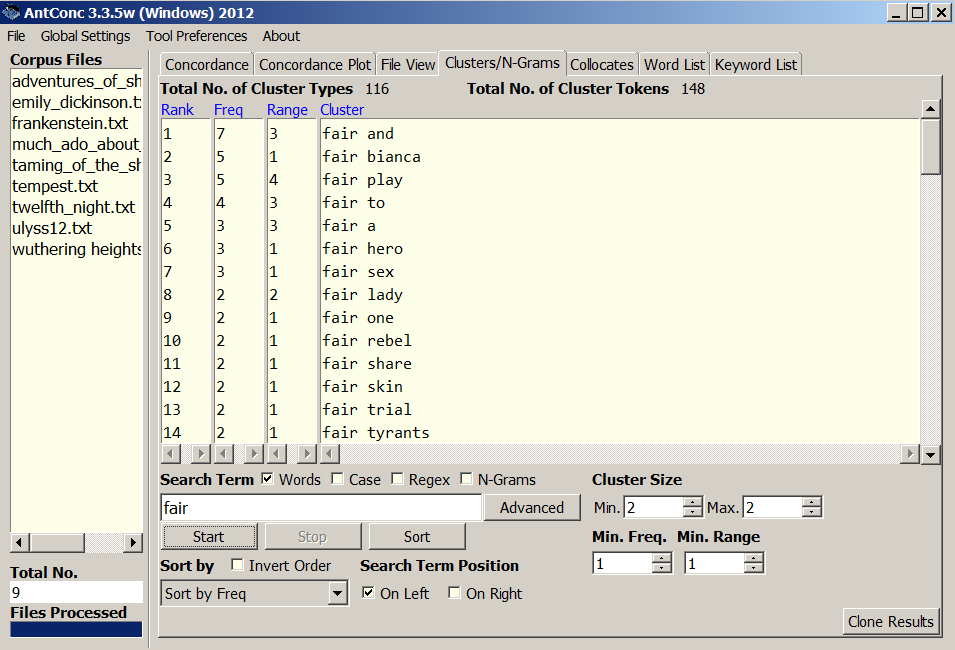

The results demonstrate that, once again, we usually find many function words among the most likely collocates of any content word, such as the word fair in our example. In terms of neighbouring content words (i.e. true collocates), however, we find not only the commonly cited example of play, but also a number of (downcased) names that obviously come from the Shakespeare plays in the ad-hoc corpus used to produce the examples.
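The idea behind a mutual information score can be sketched as follows. This uses one common formulation, the base-2 logarithm of observed over expected co-occurrences within a window around the node word; AntConc's exact computation may differ. The function name `mi_collocates`, the `span` parameter and the toy text are illustrative assumptions.

```python
import math
import re
from collections import Counter

def mi_collocates(tokens, node, span=1):
    """Score collocates of `node` as log2(observed / expected) co-occurrence."""
    n = len(tokens)
    freq = Counter(tokens)
    observed = Counter()
    for i, tok in enumerate(tokens):
        if tok != node:
            continue
        # Words within `span` positions to the left and right of the node
        window = tokens[max(0, i - span):i] + tokens[i + 1:i + 1 + span]
        observed.update(window)
    scores = {}
    for word, obs in observed.items():
        # Expected co-occurrences if node and word were distributed independently
        expected = freq[node] * freq[word] * 2 * span / n
        scores[word] = math.log2(obs / expected)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Invented toy text, only for demonstration
text = ("fair play is fair play but the fair is not the play "
        "and the game is the game")
tokens = re.findall(r"[a-z]+", text.lower())

for word, score in mi_collocates(tokens, "fair", span=1):
    print(f"{word:<6}{score:6.2f}")
```

A positive score means that a word occurs next to the node more often than chance would predict, a negative score that it occurs there less often. On very small samples such as this one the scores are not reliable, which is one reason why real collocation analyses usually apply a minimum frequency threshold.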

In some cases, however, a clustering approach based on n-grams (in our case, sequences of n words in a row), such as the one also provided by AntConc, can already show close collocations much more clearly, as the following illustration demonstrates.
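As a rough sketch of the n-gram approach, the following Python snippet counts all sequences of n consecutive words that contain a given search term. It is a simplified stand-in rather than a description of AntConc's own clustering feature, and the function name `ngrams_with` and the toy text are assumptions made for illustration.

```python
import re
from collections import Counter

def ngrams_with(tokens, term, n=2):
    """Count all n-grams (runs of n consecutive words) that contain `term`."""
    grams = (tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return Counter(g for g in grams if term in g)

# Invented toy text, only for demonstration
text = "fair play is fair play but the fair is not the play"
tokens = re.findall(r"[a-z]+", text.lower())

clusters = ngrams_with(tokens, "fair", n=2)
for gram, count in clusters.most_common(3):
    print(count, " ".join(gram))
```

Since only directly adjacent words are counted together, recurring combinations such as "fair play" stand out immediately, without the statistical noise that a wider collocation window introduces.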
